Probabilistic Representations as Building Blocks for Higher-Level Vision

Abstract

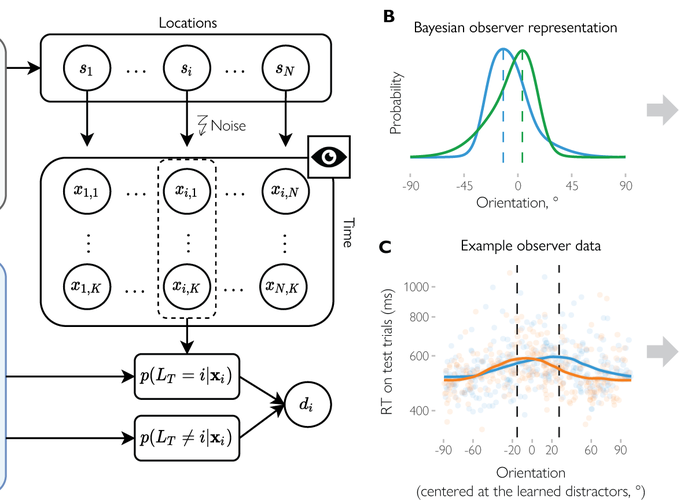

Current theories of perception suggest that the brain represents features of the world as probability distributions, but can such uncertain foundations provide the basis for everyday vision? Perceiving objects and scenes requires knowing not just how features (e.g., colors) are distributed but also where they are and which other features they are combined with. Using a Bayesian computational model, we recovered probabilistic representations used by human observers to search for odd stimuli among distractors. Importantly, we found that the brain integrates information between feature dimensions and spatial locations, leading to more precise representations than when such integration is not possible. We also uncovered representational asymmetries and biases, show their spatial organization, and explain how this structure argues against ‘summary statistics’ accounts of visual representations. Our results confirm that probabilistically encoded visual features are bound with other features and to particular locations, providing a powerful demonstration of how probabilistic representations can be a foundation for higher-level vision.