Motion direction is represented as a bimodal probability distribution in the human visual cortex

Abstract

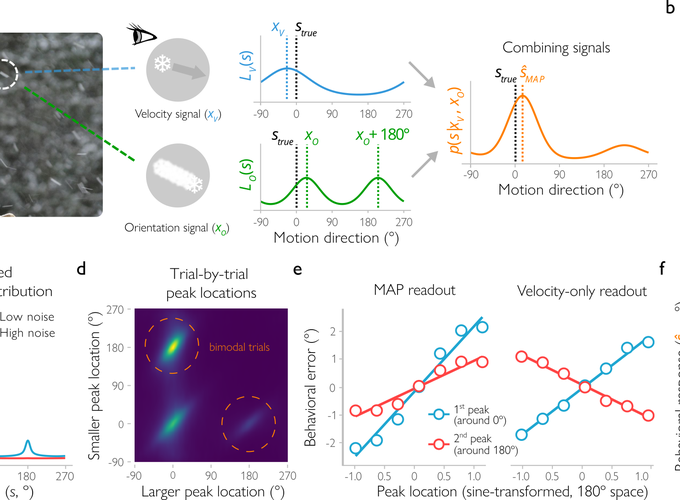

Humans infer motion direction from noisy sensory signals. We hypothesize that, to make these inferences more precise, the visual system computes motion direction not only from velocity signals but also from spatial orientation signals, namely the ‘streak’ created by moving objects. We implement this hypothesis in a Bayesian model, which quantifies knowledge with probability distributions, and test its predictions using psychophysics and fMRI. Using a probabilistic pattern-based analysis, we decode probability distributions of motion direction from trial-by-trial activity in the human visual cortex. Corroborating the model’s predictions, the decoded distributions have a bimodal shape, with peaks that predict the direction and magnitude of behavioral errors. Interestingly, we observe similar bimodality in the distribution of the observers’ behavioral responses across trials. Together, these results suggest that observers use spatial orientation signals when estimating motion direction. More broadly, our findings indicate that the cortical representation of low-level visual features, such as motion direction, can reflect a combination of several qualitatively distinct signals.