Demixing Model: A Normative Explanation for Inter-Item Biases in Memory and Perception

Abstract

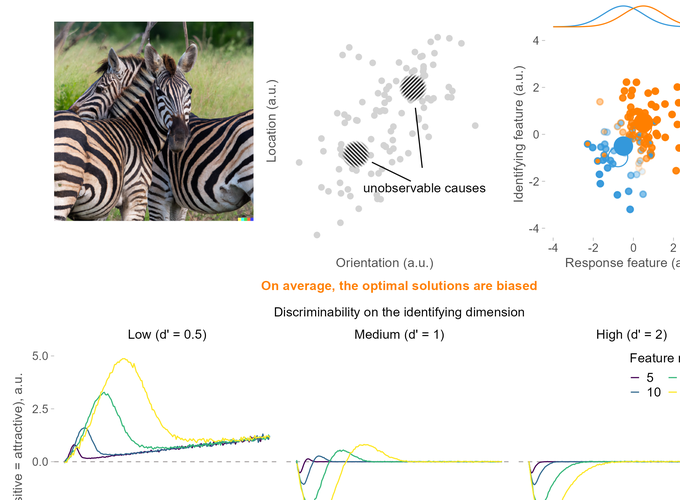

Many studies of perception and working memory demonstrate that human observers systematically deviate from the truth when estimating the features of one item in the presence of another. Such inter-item or contextual biases are well established but lack a coherent explanation at the computational level. Here, I propose a novel normative model showing that such biases arise for any observer striving for optimality when inferring the features of multiple similar objects from a mixture of sensory observations. The ‘demixing’ model predicts that the strength and direction of the bias vary as a function of the amount of sensory noise and the similarity between items. Crucially, and counter to common intuition, these biases arise not because of prior knowledge in any form, but simply because biased solutions to this inference problem are more probable than unbiased ones. The model makes novel predictions about the effects of discriminability along the dimension used to select the item to report (e.g., spatial location) and of the relative amount of sensory noise. Although the model is consistent with previously reported data from human observers, more carefully controlled studies are needed for a stringent test of its predictions. The strongest point of the ‘demixing’ model, however, is that it shows that inter-item biases are inevitable when observers lack perfect knowledge of which stimuli caused which sensory observations, which is, arguably, always the case.